FACEBOOK TIMELINE

A History of Prioritizing Growth Over Safety

Facebook platforms are home to the majority of crime content that ACCO experts track. Examining Facebook's history, our researchers started seeing a pattern in how the firm has responded to public reports about illicit activity and other problems on its platforms. What we found is that Mark Zuckerberg's approach to toxic and illegal content and behavior hasn't changed much over the years.

The purpose of this timeline is to bring together all the relevant history of Facebook's approach to illicit content, and to trace how Zuckerberg has responded to dangers that his products cause, dating back to the very start, when social media was invented in a Harvard dorm.

"Mark Zuckerberg" by Alessio Jacona is licensed under CC BY-SA 2.0.

02/04/2004

08/2004

Facebook Gets $500,000 Investment from Peter Thiel

By June of 2004, Zuckerberg had decided to put a pause on his Harvard career, dropping out to travel to California to secure investments and work on Facebook full time. He soon met with venture capitalist and PayPal co-founder Peter Thiel. By August 2004, Thiel would become Facebook's first outside investor, putting $500,000 into the fledgling start-up.

Learn more about Peter Thiel.

5/26/2005

Facebook Receives $13 Million in Funding from Accel Partners

Facebook’s growth rate quickly attracted another high-profile investor. Venture capitalist Jim Breyer at Accel Partners was so taken with thefacebook.com that in May 2005, he invested $13 million in the startup, which by then had 2.8 million users across 800 campuses. “It is a business that has seen tremendous underlying, organic growth and the team itself is intellectually honest and breathtakingly brilliant in terms of understanding the college student experience,” Mr. Breyer said. Zuckerberg, at the time, was 21 years old.

Facebook Opens Membership

On September 26, 2006, Facebook opened to anyone 13 years old and up with a valid email address. This led to a flood of new users – 12 million by the end of the year – but made it hard for moderators to know who was a real person. The customer support team had grown from 5 to about 20 people, and was then managed by a corporate customer service specialist who had no experience in tech and no college degree, according to Katherine Losse in The Boy Kings. As the workload expanded, she wrote, “he began pressuring us to hire the least educated people he could find.” The irony of calling the team “customer service” was that Facebook users were never the firm’s clients; advertisers were.

11/2008

Facebook Makes First Stab at Content Policy

By 2008, Facebook had 100 million users, but there was still no policy-driven approach to moderating Facebook. A 2019 Vanity Fair report quoted an early employee as saying content moderation amounted to little more than removing “Hitler and naked people,” plus “anything else that makes you feel uncomfortable.”

That year, Dave Willner was hired as Facebook’s head of content standards. He started looking through 15,000 photos a day and removing anything that made him uncomfortable. Willner would go on to write Facebook's first "constitution," a set of rules called the Community Standards.

05/2009

Russian Oligarch Yuri Milner Invests $200M

In May 2009, Facebook announced an investment of $200 million by Digital Sky Technologies, an investment firm founded by Russian oligarch Yuri Milner. Behind Milner’s investments was Gazprom Investholding, a Russian government-backed financial institution. Gazprom Investholding’s parent company, Gazprom, has been under US sanctions for Russia’s support of Ukrainian separatists since 2014. Ilya Zaslavskiy, a contributor to the Kleptocracy Initiative, a project of the Hudson Institute, a conservative think tank in Washington, has pointed out that Gazprom is “used for politically important and strategically important deals for the Kremlin.” Milner has also been tied to alleged Russian organized crime figures.

05/2010

Amid Privacy Complaints, Facebook Announces First Pivot to Privacy

Amid mounting complaints from users wanting better control over their personal data, Facebook announced it would implement new privacy features to give users more capacity to decide what information about them was broadcast by the News Feed. “Over the past few weeks, the number one thing we’ve heard is that many users want a simpler way to control their information,” Zuckerberg wrote in a blog post. “Today we’re starting to roll out changes that will make our controls simpler and easier.”

The next month, Facebook reached 500 million registered members, the equivalent of connecting with eight percent of the world’s population. Half of the site's membership used Facebook daily, for an average of about half an hour.

01/02/2011

Accelerating Growth, Facebook Raises $500M from Goldman Sachs

In 2011, Goldman Sachs invested $500 million in Facebook, and as part of the deal, Goldman reached out to its private wealthy clients, offering them the chance to invest as well. This was estimated to raise another $1.5 billion. At the time, Facebook was a private firm that traded shares only on secondary markets. It was the second-most accessed website in the U.S. behind Google, according to Experian Hitwise.

03/2012

07/12/2012

10/14/2012

02/19/2014

01/2015

10/06/2015

UN Investigators Say Facebook Played a “Determining Role” in Rohingya Genocide

In March 2018, the United Nations declared that Facebook had played a “determining role” in the targeted killings, rapes and forced migration of Myanmar’s Rohingya, a Muslim minority group.

Long an isolated, military-ruled backwater where information had been tightly controlled, Myanmar began opening up to the world following 2012 elections, allowing Burmese people access to mobile phones and the Internet for the first time ever. By 2015, 90% of the population had a mobile phone with Facebook already installed and free to use. For many Burmese, Facebook was all they knew of the world wide web.

But instead of becoming a force for more openness, the Myanmar military would turn Facebook into a tool of ethnic cleansing, setting up fake accounts used to spread hate content and misinformation that targeted the Rohingya. By 2017, a deadly crackdown by the army sent hundreds of thousands of Rohingya fleeing across the border into Bangladesh. The United Nations later described it as a "textbook example of ethnic cleansing."

Zuckerberg responded that what was happening in Myanmar was a “terrible tragedy, and we need to do more.”

When Wired magazine asked how many Burmese-language speakers were on its moderation team, Facebook was evasive, saying only that it had 7,500 moderators who spoke 50 different languages. Reports later suggested the firm employed just one moderator able to speak Burmese.

03/21/2018

Facebook Investors Sue in Wake of Cambridge Analytica Scandal

The lawsuit said Facebook "made materially false and misleading statements" about the company's policies, and claimed Facebook did not disclose that it allowed third parties to access data on millions of people without their knowledge. A New York Times report said that Zuckerberg and his deputy Sheryl Sandberg, both of them “bent on growth,” had ignored warning signs that their platform had become a dangerous tool, and then sought to conceal the problems they caused from public view.

04/04/2018

FDA Chief Demands Tech Firms Help Police Fight Opioid Crisis

Amid an addiction crisis killing more than 60,000 Americans a year, “internet firms simply aren't taking practical steps to find and remove these illegal opioid listings,” said FDA Commissioner Scott Gottlieb. “There's ample evidence of narcotics being advertised and sold online.”

04/06/2018

Opioid Activist Gets Instagram to Block #Oxy Content

After years of reporting Instagram accounts selling OxyContin, Eileen Carey, an ACCO expert, reached out to Facebook VP Guy Rosen and convinced him to remove search results for the hashtag #oxycontin. He did it within a single eight-hour workday. In a message to Rosen, Carey thanked him for taking down the hashtag #oxycontin, but noted that about 16,000 posts with the hashtag #fentanyl remained on Instagram. “Working on it,” Rosen responded.

04/11/2018

SESTA-FOSTA Makes Big Tech Liable for Hosting Sex Trafficking Content

SESTA-FOSTA was signed into law by President Trump on April 11, 2018, becoming the first liability carve-out of Section 230 of the Communications Decency Act, and making internet companies legally liable for hosting sex trafficking content on their platforms.

The bill followed the arrest of seven executives at Backpage.com, an Internet site that was found guilty of facilitating sex trafficking and money laundering. Internal emails proved that Backpage advised clients to remove words like “teen,” “young,” or “fresh” from their ads, while allowing the ad itself to remain online.

08/14/2018

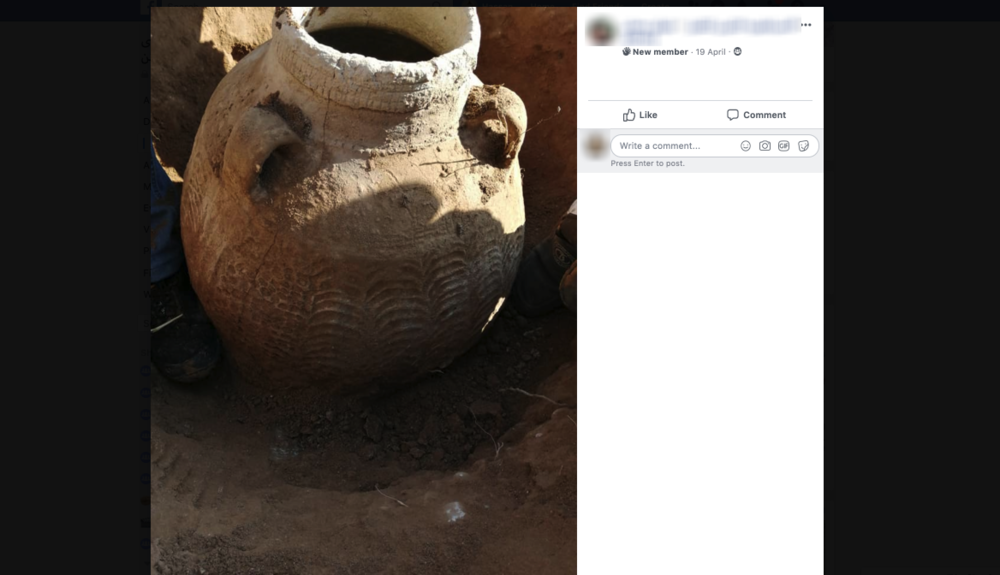

Facebook “Made It Easier Than Ever” to Traffic Antiquities

ACCO founding members Katie Paul and Amr al Azm reported that Facebook “made it easier than ever to traffic Middle Eastern antiquities.” Facebook, they wrote, “is the most high-profile of the social media platforms that have been used as vehicles for the sale of illicit artifacts; others include WhatsApp, Telegram and Viber. Antiquities traffickers use these platforms to evade the authorities and circumvent regulations imposed by online auction and e-commerce sites.”

09/25/2018

Researchers Say the Majority of Drug Content Is Still Not Removed

A Washington Post report exposed how a simple search could locate thousands of drug dealers in seconds, and how algorithms connected buyers and sellers. In response, Facebook posted a statement claiming “there is no room” for drugs on its platforms, arguing that it blocked certain search terms and made it easy for people to flag drug content. But ACCO researchers and whistleblowers reported that the majority of drug content stayed up.

12/30/2018

UN Says Facebook Enables Human Smugglers

The United Nations warned that Facebook and WhatsApp were 'enabling criminal activity' by hosting content posted by human traffickers and people smugglers who lure 'migrants to their deaths,' according to news reports. "Facebook has people working on this but it’s nothing compared with the impact,” said Leonard Doyle, spokesperson for the International Organization for Migration (IOM). “The amount of attention this gets compared with the damage it does is microscopic."

01/30/2019

Facebook Deploys Misleading Semantics About Fake Accounts

Facebook’s estimates of the number of fake users on its platforms had oscillated wildly in the firm’s reports to the Securities and Exchange Commission. A New York Times analysis discovered that the firm was only citing estimates of the number of fake users Facebook believed to remain active on its platforms. The firm’s declarations to the SEC failed to include the number of fake accounts the firm removed each quarter, which represented an astounding 25-35% of Facebook’s user base.

Facebook also claimed that its moderation teams and tools were finding and removing the majority of fake accounts. However, the New York Times reporter created 11 fake accounts of his own, and Facebook caught none of them until he flagged them to the firm.

Fake accounts are problematic because they can be used to participate in criminal activities and spread false information. Governments, such as in Myanmar, have also been involved in creating fake accounts. And since Facebook stock value and advertising revenue are directly tied to the number of active users, having such a high rate of fake accounts could impact the firm's bottom line.

“The fact there are so many unexplained differences and so many gaps, it paints a picture that, when you combine all these factors together, it would indicate there’s something going on here that they’re trying to hide,” said Aaron Greenspan, who tracks Facebook and has issued his own report on fake users.

03/14/2019

Facebook Executive Chris Cox Resigns Over Disagreement About Illicit Content

Facebook's chief product officer Chris Cox resigned, reportedly because he felt encrypting the platforms would create too much risk, and foster a sharp increase in illicit activity. “It is with great sadness I share with you that after thirteen years, I’ve decided to leave the company,” he wrote.

03/20/2019

Facebook Beefs Up Moderator Force

Scrambling to contain outrage in the wake of the Christchurch attack, Facebook publicly acknowledged that it employed more than 15,000 contractors and employees reviewing content, part of a 30,000-person department working on safety and security issues at the company.

05/02/2019

Exposé Released on the Illicit Antiquities Trade on Facebook

In May 2019, BBC News broadcast an exposé about the illicit antiquities trade on Facebook, and the undercover work of ATHAR Project archeologists to track the crisis. A month later, ACCO member ATHAR Project released their groundbreaking study, revealing that some 200 groups, with more than two million active members, existed on Facebook, where looted antiquities were marketed and sold.

Read the ATHAR Report.

05/08/2019

Facebook Moderators Push for Better Wages and Health Services

Facebook moderators, the vast majority of them contractors with starting wages equal to 14% of the median Facebook salary, began to push for better wages and mental health services. A week after The Washington Post reported on their campaign, Facebook raised wages and benefits for moderators. “Content review at our size can be challenging and we know we have more work to do,” Facebook said in a statement.

05/23/2019

Facebook Releases Its First Transparency Report

After claiming there was “no place” for drugs, terror or fake profiles on Facebook, the firm admitted to removing 3 billion fake accounts between October and March, as well as 11 million pieces of terror content and 1.5 million posts tied to selling drugs.

06/21/2019

U.K. Says Facebook Allows “Thriving Marketplace” for Fake Reviews

The United Kingdom's Competition and Markets Authority (CMA) found “troubling evidence that there is a thriving marketplace for fake and misleading online reviews” on Facebook and eBay. The CMA found 26 Facebook groups in which members were involved in buying and selling fake reviews. “Billions of pounds of people's spending is influenced by reviews every year,” according to the CMA, which pressured the tech companies to curb fake review transactions on their sites. Fake or misleading reviews are illegal under consumer protection laws in both the U.K. and the United States.

According to a follow-up report six weeks later by Which?, the U.K. equivalent of Consumer Reports, not much had changed on Facebook. “Our latest findings demonstrate that Facebook has systematically failed to take action while its platform continues to be plagued with fake review groups generating thousands of posts a day.”

07/24/2019

Facebook Fined $100M by SEC Over Cambridge Analytica Scandal

Facebook agreed to pay the US Securities and Exchange Commission a $100 million fine for making "misleading disclosures" about the risk of abusing users' data. The SEC case hinged on the fact that Facebook knew about the data breach in 2015, but continued to describe possible data breaches in purely "hypothetical" terms to investors. Facebook also didn't have "specific policies or procedures" in effect to make accurate disclosures in light of the results of the investigation.

10/23/2019

Zuckerberg Grilled About Child Sex Exploitation in Congress

Testifying before the House Financial Services Committee, Zuckerberg was assailed by Rep. Ann Wagner over reports that 16.8M of the 18.4M reports sent to the national cyber tip line on child sex exploitation were from Facebook platforms. In response, Zuckerberg told lawmakers: "The reason why the vast majority come from Facebook is because we work harder than any other company to identify this behavior."

12/05/2019

Former Moderators Sue Facebook For Giving Them PTSD

"My first day on the job, I witnessed someone being beaten to death with a plank of wood with nails in it and repeatedly stabbed," said one. "Day two was the first time for me seeing bestiality on video — and it all escalated from there.”

As low-paid outsourced workers, content moderators claimed that they suffered from “psychological trauma” as a result of poor working conditions and a lack of proper training to prepare them for viewing some of the most horrific content seen anywhere online. One plaintiff hoped that better care and working conditions would improve the quality of moderation decisions and impact on society.

The firm now employed 40,000 moderators. Read more.

04/10/2020

Facebook Deploys the 99% Line Again, This Time About Child Sex Exploitation Material

After Facebook shareholders demanded the firm respond to widespread reports of rampant child sex abuse material on Facebook platforms, the firm claimed in an SEC filing that “Generally, we proactively detect over 99% of the content we remove for violating [child sex exploitation] policy."

5/29/2020

President Trump Signs EO Limiting Legal Protections for Tech Industry

President Trump signed an executive order targeting the broad legal immunity tech firms enjoy for content posted by users. Primarily focused on alleged political bias in content removal, the order also created a space for victims of online crime to seek justice against Internet companies. As the executive order was signed, Attorney General William Barr also announced the Justice Department was drafting legislation for Congress to revise liability protections for the tech industry.

6/17/2020

US Department of Justice Proposes Limits to Tech Industry Protections

The US Department of Justice (DOJ) announced a proposal that would incentivize Internet companies to be more aggressive about removing illicit and harmful content, as well as encouraging the companies to be more transparent about content removal. The proposed changes would remove a liability shield for tech firms that enabled activity such as online scams, drug and human trafficking, and other activities that violate federal law.

08/05/2020

Online Wildlife Trafficking Increasing in Every Country, According to WWF

In 2018, Facebook had pledged to reduce illicit wildlife markets on its platforms by 80% by 2020. Instead, a 2020 report by World Wildlife Fund (WWF) found the opposite had happened.

“It’s increasing in every country,” said Jedsada Taweekan, a WWF regional manager, adding that the volume of wildlife products sold online had approximately doubled since 2015.

A July 2020 study by TRAFFIC documented that the weekly average number of ivory items rose by 46.3% and the number of posts climbed by 74.8% in 2019, compared to 2016. Facebook only removed the posts and accounts after the report was published.

08/13/2020

Are you angry about what the Internet has become? Join our fight by making a donation!

In the last year, ACCO’s mission to counter online crime has become more vital than ever. Since the COVID-19 lockdown started, there’s been a 75% surge in online crime, a shadow pandemic happening in the devices that have become our lifeline to the world. Identity theft, wildlife crime and deaths from opioids have all doubled, but perhaps most horrifying, there’s been a 300% increase in reports of child sex abuse content. Meanwhile, Facebook continues to deflect any responsibility for the public’s safety.

Growth in Criminals Broadcasting on Facebook Live

Law enforcement across the globe began to contend with a growing number of rapists, child abusers, and murderers broadcasting their crimes via Facebook Live or posting them on social media.

Posting crime on social media became a way for criminals to brag, thus gaining a sense of self-importance. This may seem foolishly self-defeating, but according to media psychologist Pamela Rutledge, the sheer size of the social media audience was “perhaps, more seductive to those who are committing antisocial acts to fill personal needs of self-aggrandizement.”

05/01/2017

UK Lawmakers Call for Fining Social Media Companies Over Terror, Hate Content

An inquiry by the British House of Commons Home Affairs Committee condemned social media firms for failing to tackle hate speech and terror content, proposing a system of escalating sanctions, to include “meaningful fines” for tech platforms that fail to remove illegal content within a strict time frame. “This isn’t beyond them to solve, yet they are failing to do so,” said the report. “They continue to operate as platforms for hatred and extremism without even taking basic steps to make sure they can quickly stop illegal material, properly enforce their own community standards, or keep people safe.” The British Prime Minister’s office said firms like Facebook “can and must do more” to remove inflammatory material from the web but added that it was up to them to respond to public concern. Facebook responded that, “There is absolutely no place for terrorist groups on Facebook and we do not allow content that promotes terrorism on our platform.” The firm refused to divulge to UK authorities the number of moderators it used to police content.

05/03/2017

Amid Uptick in Murders, Suicides, Zuckerberg Expands Moderator Force

In May 2017, following a spate of suicides and murders that were streamed or hosted on Facebook for hours before being taken down, Zuckerberg announced plans to almost double his moderator force from 4,500 to 7,500. “These reviewers will also help us get better at removing things we don't allow on Facebook like hate speech and child exploitation,” he said. He added that the firm was also “building better tools to keep our community safe.”

11/01/2017

Zuckerberg Promises Investors Major Investments in Security

In a November 1 earnings call, available here, Zuckerberg promised that, “We're doing a lot here with investments both in people and technology. Some of this is focused on finding bad actors and bad behavior. Some is focused on removing false news, hate speech, bullying, and other problematic content that we don't want in our community.” He said he was doubling his 10,000-strong team “working on safety and security” in the coming year as well as “doubling or more our engineering efforts focused on security. And we're also building new AI to detect bad content and bad actors -- just like we've done with terrorist propaganda.” He went on to acknowledge this spending would cut into earnings, but insisted that, “protecting our community is more important than maximizing our profits.” He said the firm was also working to expand Facebook Marketplace, as well as video streaming.

01/04/2018

Zuckerberg’s 2018 New Year Resolution: “Fix Facebook”

In a January 4 blog post, Zuckerberg acknowledged that his firm had “a lot of work to do -- whether it's protecting our community from abuse and hate, defending against interference by nation states, or making sure that time spent on Facebook is time well spent.” The Facebook founder said it would be “my personal challenge ... to focus on fixing these important issues. We won't prevent all mistakes or abuse, but we currently make too many errors enforcing our policies and preventing misuse of our tools. If we're successful this year then we'll end 2018 on a much better trajectory.” The acknowledgement came the same month it was revealed that Facebook had lost daily users for the first time ever in the U.S. and Canada.

03/12/2018

Facebook Reaches 1 Billion Users

In September 2012, Facebook became one of the fastest-growing websites in history when it reached one billion monthly active members.

“We belong to a rich tradition of people making things that bring us together,” Zuckerberg wrote, “we honor the humanity of the people we serve. We honor the everyday things people have always made to bring us together.”

It was not clarified whether the 83 million fake accounts were counted in the 1 billion user mark.

Facebook Acquires WhatsApp, an Encrypted Messaging App Popular with Criminals

Facebook bought WhatsApp for approximately $16 billion in order to capture the market for encrypted messaging on phones and the web. WhatsApp became another Facebook platform popular with criminals and other illicit actors as a means of communication. Because the service is encrypted, it is difficult for law enforcement to track illegal behavior on it.

Facebook Begins to Take a Multi-Pronged Approach to Moderation

After years of treating content moderation as a customer support issue, Facebook in 2015 finally began to develop official policy towards toxic and illegal content. Monika Bickert, the Head of Global Policy Management, ran a team that defined policy. She would team up with Ellen Silver, who worked with the content moderators around the world, to implement those policies. Guy Rosen, Vice President for Product Management, would build proactive detection tools to find illicit content faster. They called themselves “the three-sided coin.”

Concerns Escalate as ISIS Weaponizes Social Media

The Islamic State expanded its audience and increased its funding by weaponizing social media platforms like Facebook, Twitter and YouTube. In a report documenting terrorism propaganda on social media, researchers found that ISIS released 38 unique pieces of content a day, surpassing some major commercial brands and news organizations. ISIS content ranged from short videos to full-length documents in a variety of languages that depicted anything from quiet civilian life to violent acts of war.

As Wired Magazine put it, social media had provided terror groups cheap, easy access to the minds and eyeballs of millions.

12/04/2015

First Reports of ISIS Selling Looted Antiquities on Facebook

In December 2015, the first reports emerged of ISIS selling looted antiquities on Facebook in order to raise money. Archeologists tracking the plunder of Iraq and Syria, as well as U.S. State Department officials, feared the antiquities trade was becoming a small, but significant revenue stream for the terror group.

Read about the emerging threat in this New Yorker article.

04/28/2016

Facebook Shares Hit New All-Time High as Wall Street Cheers Earnings

In April 2016, Facebook’s stock rose more than 7% on strong Q1 2016 earnings. It seemed the firm could do no wrong. “As long as the users are there, Facebook will find ways to monetize,” gushed Macquarie Research, a Wall Street analytics shop. “The company continues to excel at keeping users engaged while also improving ad formats, targeting and measurement for advertisers.”

01/27/2017

Outsourced Content Moderators Paid $1-4/hour

By March 2012, Facebook was outsourcing most content moderation through oDesk, a global freelancing platform that would later merge to become Upwork. Job postings made no mention of working for Facebook, and each hire had to sign a strict non-disclosure agreement as part of a brief training process. The moderators mainly came from Turkey, Mexico, the Philippines and India, and were paid between $1 and $4 an hour to view a stream of pictures, videos and wall posts that had been flagged by users.

Moderators worked from a decision tree, a version of which still exists today, that gave them three options: confirm the content was a violation (in which case it would be deleted); allow it to stay up; or escalate it to a higher level of moderation, which would refer the content to a Facebook employee in California, who would, if necessary, report it to the authorities.

A 17-page manual laid out exactly what could be confirmed or deleted by the team. Moderators described the job as like wading through “a sewer channel,” with one reporting “pedophilia, necrophilia, beheadings, suicides, etc. … I left [because] I value my mental sanity.” Much drug content, according to early rules, was allowed to stay up.

Facebook Pressured to Prevent Child Exploitation

By 2012, the rise of smartphones and location-oriented services made it easier than ever for predators to connect with children. Under pressure to prevent child exploitation, Facebook and other tech firms began to implement systems that blended automated scanners and human monitoring. But this type of screening increased moderation costs, since the complex software depended on relationship analysis and archives of real chats that led to sexual assaults. It was also imperfect. “I feel for every one we arrest, ten others get through the system,” said Special Agent Supervisor Jeffrey Duncan of the Florida Department of Law Enforcement of tips from Facebook and other companies.

09/26/2006

10/28/2003

Zuckerberg’s First Venture: Rating Women’s Looks

In November 2003, Mark Zuckerberg, then a sophomore at Harvard, wrote the code to create Facemash, a platform that allowed visitors to compare pictures of two female students side by side and vote on which woman was more attractive. To obtain content, Zuckerberg illegally hacked into protected areas of Harvard's computer network and copied private dormitory ID images. Facemash outraged many students, alarmed school administrators, and almost got Zuckerberg expelled “for breaching security, violating copyrights and violating individual privacy,” according to the Harvard Crimson. This was how Zuckerberg got his start.

06/09/2004

Content Moderators Waded through Toxic Content from the Very Start

A small customer support team, originally just five people, handled content moderation in Facebook’s early days, but there was never any formal, written policy towards toxic content. Even at the point when Facebook had reached five million users, “customer support was barely on Mark’s radar,” wrote Katherine Losse, Facebook’s 50th employee and a member of the original customer support team. Employees like her who handled content problems at Facebook were second-class citizens, paid less than half what engineers earned and, as hourly employees, ineligible to receive benefits provided to those on salary.

Losse soon began to wonder if providing people the opportunity to publish whatever they wanted, whenever they wanted, would end up harming society. “Thousands of emails flooded our systems each day… The angst that flowed through onto my screen was overwhelming sometimes.”

Her team held daily discussions, deciding ad hoc what content would and would not be allowed. They grappled with cyberbullying, hate speech directed at blacks and gays, misogynistic attacks on female users, and images of dead bodies, often showing signs of torture. “After long discussion, we decided that if a group contained any threat of violence against a person or persons, it would be removed. One aspect of our jobs, then, became scanning group descriptions for evidence of death threats, and searching for pictures of dead people. This was the dark side of the social network, the opposite of the party photos with smiling college kids and their plastic cups of beer, and we saw it every day.”

Losse’s memoir of her time at Facebook, The Boy Kings, is available to buy or in preview.

04/30/2009

Unlike Other Platforms, Facebook's “Porn Cops” Worked Manually

By 2009, Facebook had developed semi-formal policies towards pornographic and toxic content. Internally known as the “porn cops,” 150 of the firm’s 850 employees worked manually to moderate content and remove material that might trouble advertisers. They focused mainly on drug use, nudity and spammers, but only reviewed content that had been flagged to them by users.

Facebook’s archrival MySpace, by comparison, employed "hundreds" of moderators, and had developed proprietary software systems to proactively review every one of the 15M to 20M images uploaded to the site each day, not just the ones flagged by users.

In this period, Facebook also began responding to law enforcement requests to help solve crimes, reportedly receiving around 10-20 police requests per day.

10/17/2009

Facebook Reaches 300M, Becomes Cash Flow Positive

In a blog post on Facebook, Mark Zuckerberg announced that his platform had reached 300 million users and had become cash flow positive. "This is important to us because it sets Facebook up to be a strong independent service for the long term," Zuckerberg wrote. He didn’t mention how much the company earned, but CBC speculated that Facebook was earning hundreds of millions of dollars annually in advertising revenue.

10/06/2010

Facebook Launches Groups, Some Become Crime Havens

On October 6, 2010, Facebook launched the Groups feature, which allowed users to share content with specific circles of friends. Zuckerberg told BBC News, "One of the things we have heard is that people just want to share information with smaller groups of people. It will enable people to share things that they wouldn't have wanted to share with all of their friends."

Multiple investigations, including internal ones at Facebook, have found that Groups -- in particular private and secret groups -- are the epicenter of hateful content and criminal activity that spread on Facebook. The firm also implemented an algorithmic tool that indexed each user’s social network to identify clusters of potential friends, recommending they form groups.

08/09/2011

Facebook Launches Messenger, Another Tool Attractive to Criminals

As more and more people began to purchase smartphones, Facebook launched Facebook Messenger, a separate app that allowed people to connect with their Facebook friends through texting and notifications. Facebook Messenger would go on to become a preferred tool for illicit actors, including drug traffickers, child pornographers and terrorists, to communicate and pass content.

04/09/2012

Facebook Buys Instagram for $1B

Facebook purchased Instagram, a popular photo sharing app, for about $1 billion in cash and stock. Founded in 2010, Instagram was one of the most downloaded apps on the iPhone and had roughly 30 million users. With just a handful of employees, Instagram was valued at about $500 million and had several prominent investors. Facebook’s purchase meant that the app doubled in value. This was Facebook’s largest acquisition to date.

05/18/2012

Facebook Goes Public

On May 18, Facebook went public, but despite considerable pre-launch hype, its stock closed at $38.23, just 23 cents above the $38 per-share price set the night before. More than 80 million shares changed hands in the first 30 seconds of trading, however, and by the end of the day trading volume had reached around 567 million shares. Priced at $38 a share, the IPO raised $16 billion, making it the third-highest IPO-day valuation in history.

08/01/2012

Number of Fake Accounts Reaches All Time High

In a regulatory filing, Facebook admitted that 83 million accounts, or 8.7% of its users, were fake. The filing described three categories of fake accounts: duplicate, misclassified and “undesirable.” The 14.3 million accounts that were classified “undesirable” were defined as those that participate in illicit or offensive activity such as scams, spam or crime.

08/21/2013

Facebook Launches Internet.org, Ostensibly for Humanitarian Reasons

On August 21, Mark Zuckerberg, together with several tech firms, launched Internet.org, an initiative aimed at increasing Internet access in developing countries and rural areas. Publicly, the initiative was framed as humanitarian, but it also accelerated the firm’s growth. At the time, only 2.7 billion people around the globe had access to the Internet, and with 1 billion users already on Facebook, the firm was creating room to grow by expanding its potential customer base beyond already saturated markets in North America and Europe.

The initiative allowed Facebook to provide its platform for free to users across the developing world, meaning that Facebook, in essence, became the Internet in those places. In some countries like the Philippines, Facebook has played a major role in spreading conspiracy theories that caused violence in real life. Maria Ressa, a prominent Filipino reporter and human rights activist, later called Facebook the “fertilizer” of democratic collapse in the Philippines. Internet.org was eventually banned in India over concerns about net neutrality and the rampant spread of misinformation.

The other founding members of the initiative were Ericsson, MediaTek, Nokia, Opera, Qualcomm and Samsung.

10/31/2014

Facebook Experiments with Encryption on its Website

On October 31st, Facebook allowed users of Tor, an anonymizing browser, access to its platform by launching a .onion address. In the past, it was tricky for Tor users to log into Facebook because of the way the platform blocks hacked accounts. The .onion address, https://facebookcorewwwi.onion/, was an experiment in end-to-end encryption on the website.

08/24/2015

Facebook Reaches 1 Billion Daily Active Users

Mark Zuckerberg announced that “On Monday, 1 in 7 people on Earth used Facebook to connect with their friends and family.” Even as he celebrated the milestone, the Facebook CEO remained laser-focused on growth, saying this was “just the beginning of connecting the whole world.”

11/05/2015

Social Media Identified As Key Challenge to Stopping Illegal Trade in Cheetahs

National authorities of the Convention on International Trade in Endangered Species of Wild Fauna and Flora (CITES) identified social media as one of the key challenges in combating the illegal trade in cheetahs. Secretary General John E. Scanlon noted that the total population was estimated to number fewer than 10,000 cheetahs, most of them located in the savannas of Eastern and Southern Africa. The Gulf States are the primary destination for illegally traded cheetahs, which are popular there as pets and routinely sold on Instagram.

03/2016

TRAFFIC Report Says Social Media Facilitates Wildlife Trade

A 2016 TRAFFIC report said, in brief, that Facebook "contribut[ed] to the expansion of all wildlife crime" and "allow[ed] for a great deal of anonymity," that "sellers appear[ed] to operate with impunity," and that "very little effort [was] made to conceal the illegal nature" of this trade.

11/16/2016

Concerns Emerge About the Illegal Wildlife Trade on Facebook

ACCO member the Wildlife Justice Commission (WJC) hosted a conference highlighting a wildlife trafficking hub in Vietnam that was moving illegal wildlife goods on an "industrial scale" through social media, especially Facebook and WeChat.

The investigation found that wildlife products were being sold in closed or secret groups through auctions. New buyers or sellers had to be approved before being allowed to join the group. Payment was then usually done through WeChat Wallet or Facebook Pay.

“Social media provides a shopfront to the world,” said Olivia Swaak-Goldman, Executive Director of WJC.

Facebook did not attend the conference.

02/16/2017

Zuckerberg Says Facebook is Implementing Artificial Intelligence to Fight Toxic Content

In a lengthy February 2017 blog post, Zuckerberg wrote that Facebook’s job was to “help people make the greatest positive impact while mitigating areas where technology and social media can contribute to divisiveness and isolation.” Acknowledging that “terribly tragic events” had been broadcast on his family of platforms, he declared that “artificial intelligence (AI) can help provide a better approach.” He claimed that “it will take many years to fully develop these systems,” but said in some sectors, AI “already generates about one-third of all reports to the team that reviews content for our community.” He also declared that “keeping our community safe does not require compromising privacy,” claiming that, “since building end-to-end encryption into WhatsApp, we have reduced spam and malicious content by more than 75%.” Content problems stemmed mainly from “operational scaling issues,” he wrote, adding that, “when in doubt, we always favor giving people the power to share more.”

05/02/2017

Facebook Internal Rulebook Leaked, Showing Animal Abuse Content is Allowed

In May 2017, the Guardian published an exposé on Facebook’s secret rules and guidelines for deciding what its 2 billion users could post on the site. More than 100 internal training manuals, spreadsheets and flowcharts exposed oft-contradictory guidance, such as leaving up images of animal abuse in order “to raise awareness and condemn the abuse.” But employees quoted in the story said the daily deluge of toxic content had overwhelmed the platform. “Facebook cannot keep control of its content,” said one source quoted in the story. “It has grown too big, too quickly.”

11/01/2017

Founding Members of ACCO File Complaint With SEC Over Illicit Wildlife Content on Facebook

Founding members of ACCO filed an anonymous whistleblower complaint against Facebook with the U.S. Securities and Exchange Commission alleging the firm was knowingly profiting from the trafficking of endangered species.

The complaint, filed with the SEC in August of 2017, alleged that Facebook had failed to implement the necessary and required internal controls to curtail criminal activity occurring on Facebook’s social media pages. The complaint explained how Facebook was engaged in the business of selling advertisements on web pages it knew or should have known were being used by traffickers to market endangered species and animal parts.

“Extinctions are forever so it is an urgent necessity to stop the trafficking on Facebook of critically endangered species immediately and forever,” said chief attorney Stephen M. Kohn.

12/27/2017

Moderating Facebook Called “Worst Job in Technology”

A Wall Street Journal report described moderators struggling with mental health issues as they “stare at human depravity” to keep it off Facebook. “I was watching the content of deranged psychos in the woods somewhere who don’t have a conscience for the texture or feel of human connection,” said a moderator. After ProPublica ran a study on hate speech, finding that content moderators made the wrong call on almost half the cases they flagged, Facebook VP Justin Osofsky responded in a statement: "We're sorry for the mistakes we have made," he said. "We must do better."

Read the WSJ report. Read the ProPublica study.

03/07/2018

Facebook Pledges to Reduce Wildlife Crime by 80%

More than 30 tech firms, including Facebook and Google, joined the Global Coalition to End Wildlife Trafficking Online, pledging to reduce the illegal online trade in ivory and other wildlife products by 80 percent by 2020. Facebook would fail to achieve this pledge.

03/17/2018

Cambridge Analytica Scandal Revealed

On March 17, 2018, the Guardian and the New York Times jointly revealed that Cambridge Analytica had harvested the data of 50M Facebook users without their permission to support then-candidate Donald Trump in his presidential election campaign. Christopher Wylie, a former Cambridge Analytica employee turned whistleblower, claimed the firm also helped Conservatives swing the outcome of the UK's Brexit referendum using Facebook data. The scandal led to multiple inquiries in the US and the UK, wiped $36B off Facebook’s market value within 48 hours, and prompted a viral #deleteFacebook campaign.

In a blog post, Facebook insisted that, “Protecting people’s information is at the heart of everything we do.”

03/20/2018

The FTC Launches an Inquiry Over Privacy Protections

The Federal Trade Commission (FTC) opened an investigation into whether Facebook had violated a settlement reached with the U.S. government agency in 2011 over protecting user privacy. Zuckerberg later posted on Facebook, “We will learn from this experience to secure our platform further and make our community safer for everyone going forward.”

03/25/2018

Zuckerberg Says Sorry

Zuckerberg took out ads in almost all of Britain’s major newspapers, as well as major American newspapers, including The New York Times, The Washington Post and the Wall Street Journal, to apologize for the Cambridge Analytica scandal. “This was a breach of trust, and I’m sorry we didn’t do more at the time. We’re now taking steps to ensure this doesn't happen again,” he said. “I promise to do better for you.”

04/05/2018

Facebook Claims it Removed 99% of Terror Content

In response to a media report that showed big-name brands’ advertisements next to extremist content on YouTube, Facebook began to roll out a carefully worded statement implying it removed 99% of terrorist content.

In one example, COO Sheryl Sandberg told NPR: "There’s no place for terrorism on our platform. We've worked really hard on this — 99 percent of the ISIS content we're able to take down now, we find before it's even posted. We've worked very closely with law enforcement all across the world to make sure there is no terrorism content on our site. And that's something we care about very deeply."

Five days later, Zuckerberg testified in Congress that the firm’s AI systems “can identify and take down 99% of ISIS content before people even see it." But he admitted the numbers were lower with regard to drug content. "What we need to do is build more AI tools that can proactively find that content," Zuckerberg said. "There are a number of areas of content that we need to do a better job."

04/10/2018

Zuckerberg Testifies in Congress Over Cambridge Analytica

In April, the Facebook CEO admitted to Senators: “It’s clear now that we didn’t do enough to prevent these tools from being used for harm. That goes for fake news, foreign interference in elections, and hate speech, as well as developers and data privacy. It's not enough to just connect people. We have to make sure that those connections are positive. It's not enough to just give people a voice. We need to make sure that people aren't using it to harm other people or to spread misinformation.”

In a separate hearing in the House, lawmakers from both parties grilled Zuckerberg about illegal drug, wildlife, and counterfeit content on Facebook. Zuckerberg responded that he aimed to have 20,000 moderators tracking illegal activity and toxic content within a year.

Read Zuckerberg’s Senate testimony here. Read about Congressional concerns over the illegal activity on Facebook here and here.

04/24/2018

Facebook Publishes Community Standards for the First Time

"We want to give people clarity," said Monica Bickert, Facebook's VP of Global Policy Management. "We think people should know exactly how we apply these policies. If they have content removed for hate speech, they should be able to look at their speech and figure out why it fell under that definition.”

09/05/2018

Facebook COO Sheryl Sandberg Says Facebook Investing in Security

Facebook COO Sheryl Sandberg told the Senate: "We’re investing heavily in people and technology to keep our community safe and keep our service secure…When we find bad actors, we will block them. When we find content that violates our policies, we will take it down."

Facebook executives would roll out versions of this carefully worded statement multiple times to lawmakers and regulators, but never provided specifics as to how they planned to remove toxic and illicit content.

12/18/2018

Facebook Shared More Personal Data Than It Had Disclosed

After Facebook promised lawmakers in April that its users would have “complete control” over how their data was used, it emerged that the firm had since 2010 granted more than 150 third parties, including Microsoft, Amazon, Yahoo, Netflix, and Spotify, access to users' private messages, address book contents, and private posts without the users' consent. This had been occurring despite public statements from Facebook that it had stopped such sharing years earlier. “We know we’ve got work to do to regain people’s trust,” said Steve Satterfield, Facebook’s Director of Privacy and Public Policy.

01/05/2019

Whistleblower Says 99% Removal Rate Claim Was False

In a petition to the Securities and Exchange Commission filed in January 2019, and updated in April 2019, an anonymous whistleblower delivered an analysis showing that Facebook was removing less than 30% of users who identified themselves as members of designated terrorist groups, a rate far lower than the 99% removal rate Facebook routinely claimed. The petition alleged that, not only was Facebook failing to block terror content, but it was in fact creating new terror content on its platforms through its auto-generation features. The petition delivered similar findings about hate content on Facebook platforms.

03/01/2019

Facebook Moderators Sue Over Mental Distress

Facebook content moderators filed a class action lawsuit against Facebook, alleging they suffered psychological trauma and symptoms of post-traumatic stress disorder caused by reviewing violent images on the social network. "The fact that Facebook does not seem to want to take responsibility, but rather treats these human beings as disposable, should scare all of us," said Steve Williams, a lawyer representing the content moderators. Facebook fought to have the case dismissed, but later settled for $52M.

03/06/2019

Zuckerberg Launches Another Pivot to Privacy

The Facebook founder announced at the annual F8 conference that he planned to encrypt his entire family of platforms, and move all activity into Groups. "As I think about the future of the Internet, I believe a privacy-focused communications platform will become even more important than today's open platforms."

03/15/2019

Christchurch Massacre, Killing 50, is Livestreamed on Facebook

It took 29 minutes and more than 4,000 views before Facebook removed the Christchurch massacre livestream, which Facebook’s weak AI had failed to identify on its own because it was filmed from the shooter's point of view. In the first 24 hours after the gruesome attack, footage of which went viral, Facebook admitted to removing 1.5M copies.

04/24/2019

Zuckerberg Touts Facebook's Capacity to Remove Terror Content

In an earnings call, Zuckerberg responded to concerns raised by investors about his firm’s capacity to remove violent and illicit content, again using a carefully worded claim. "In areas like terrorism, for Al Qaeda and ISIS related content, now 99 percent of the content that we take down in the category, our systems flag proactively before anyone sees it. That’s what really good looks like."

He deployed the same careful wording in a speech months later at Georgetown University, saying, “We build specific systems to address each type of harmful content — from incitement of violence to child exploitation to other harms like intellectual property violations — about 20 categories in total. We judge ourselves by the prevalence of harmful content and what percent we find proactively before anyone reports it to us.” In other words, Facebook wasn’t grading itself on, or even revealing, the overall amount of toxic and illegal content it could find and remove. Critics suggested such an approach would incentivize the firm to remove as little user-flagged content as possible, thus allowing it to artificially maintain a high proactive removal rate.

05/09/2019

Facebook Co-Founder Calls for Firm to Be Broken Up

Chris Hughes, who co-founded Facebook with Mark Zuckerberg back when they both attended Harvard, published an editorial in the New York Times condemning Zuckerberg’s control over 60% of Facebook voting shares, saying his influence was dangerous. “The government must hold Mark accountable,” Hughes wrote.

06/15/2019

Facebook Announces Libra, a Cryptocurrency Association

Facebook formally launched Libra, a cryptocurrency association backed by 27 different partners including MasterCard and Uber. The digital currency was to be pegged to several traditional currencies, including the dollar and the euro, and would have instant access to Facebook’s 2 billion users. Facebook executives claimed they were creating Libra to help the poor and unbanked, who don’t have access to brick-and-mortar banks or credit cards. However, the New York Times speculated that creating a financial network would help Facebook find new revenue in the event of a decline in ad revenue.

U.S. legislators were immediately critical of Libra, citing concerns about privacy, competition and the ability to prevent money laundering and other criminal activity. Senator Sherrod Brown, the top Democrat on the Senate Banking Committee, blasted Facebook as “dangerous,” adding that it would be “crazy to give them a chance to experiment with people’s bank accounts.” The digital currency was to be launched in 2020 but has since been postponed.

07/12/2019

FTC Fines Facebook $5B; Zuckerberg Actually Profits

The Federal Trade Commission fined Facebook a massive $5B in the wake of the Cambridge Analytica scandal to settle charges that it had violated a 2012 FTC order concerning the privacy of user data. As part of the FTC settlement, Facebook had to agree to new rules for managing user data, to create a new independent privacy committee and to receive reports from an independent privacy "assessor," appointed to monitor Facebook's actions. Critics and media reports said the fine, although the largest in FTC history, was so weak that Facebook stock actually rose, ironically making Zuckerberg richer.

10/03/2019

US, UK, Australia Warn Facebook Against Encryption

The United States, United Kingdom, and Australian governments sent an open letter to Facebook in response to the company’s publicly announced plans to implement end-to-end encryption across its messaging services.

“Security enhancements to the virtual world should not make us more vulnerable in the physical world. We must find a way to balance the need to secure data with public safety and the need for law enforcement to access the information they need to safeguard the public, investigate crimes, and prevent future criminal activity. Not doing so hinders our law enforcement agencies’ ability to stop criminals and abusers in their tracks,” the open letter said.

The US National Center for Missing and Exploited Children (NCMEC) has estimated that 70% of Facebook’s reporting - 12 million reports globally - on child exploitation would be lost if Facebook went ahead with plans to encrypt Messenger.

11/01/2019

Facebook Releases Second Transparency Report

The firm admitted to "acting on" 4.4 million pieces of drug content in Q3 2019, as well as 11.6 million pieces of child sex exploitation content. To put that second number in perspective, it's about the population of Ohio. Facebook didn’t define what “acting on” meant.

03/15/2020

A Year Later, Christchurch Videos Still Circulate on Facebook

A year after the Christchurch massacre, copies of the attack video could still be found on Facebook platforms. All eight videos found by the Tech Transparency Project had been posted shortly after the shooting, highlighting Facebook’s incapacity or unwillingness to remove violent content from its platform, even under intense global scrutiny.

05/27/2020

ACCO Files Major SEC Complaint

Based on evidence provided by confidential whistleblowers, ACCO filed another update to its SEC Complaint. In it, ACCO detailed how Facebook pressured moderators not to remove illegal and toxic content, misled the public and investment community about its commitment to removing this content and consistently made business choices that facilitated criminal activity across its family of platforms.

06/17/2020

Facebook Loses Advertisers Over Hate Content

The #StopHateforProfit Campaign convinced more than 1,000 major advertisers to pause advertising on Facebook for July 2020, or until the firm addressed civil rights issues and hate content. After meeting with Zuckerberg and Sandberg, campaigners said Facebook’s response amounted to little more than “spin,” as the firm agreed to none of their ten demands. The same week, an independent audit found that Facebook didn’t do enough to fight discrimination on its platform and had made decisions that represented “significant setbacks for civil rights.” Sandberg acknowledged it had “become increasingly clear that we have a long way to go.”

Even though it was the biggest Facebook boycott to date, the firm's stock nonetheless hit record highs.

06/23/2020

Facebook Bans the Sale of Historical Artifacts

In June 2020, Facebook banned the sale of antiquities on its platforms. While this move marked a major policy shift, the company has historically done precious little to enforce such policies. Since antiquities trafficking is a war crime, the ATHAR Project called on Facebook to preserve the data it held on plundered objects, rather than delete it.

08/13/2020

Fake Review Marketplace Still Thriving on Facebook

Facebook groups arranging fake Amazon product reviews continue to thrive, according to U.K. consumer advocate Which? and the Financial Times. Which? researchers reported that although Facebook had removed some groups involved in arranging fake reviews, overall activity remained unchanged. Facebook had signed an agreement in January 2020 with the United Kingdom's Competition and Markets Authority (CMA) pledging to curb the fraudulent review trade on its platforms.

Amazon isn't the only platform being gamed in the Facebook fake review marketplace. ACCO researcher Kathryn Dean has uncovered thousands of fake reviews being arranged in Facebook groups for Google, Yelp, and multiple other review sites. See her op-ed on the problem here.

Did Zuckerberg Steal the Original Idea for Facebook?

A few months later, in February 2004, Zuckerberg was back with a new venture: thefacebook.com. Named after a Harvard directory for first-year students, the website allowed students to create personal profiles and connect with other students through courses, social organizations and Houses.

Zuckerberg was later accused of stealing the idea for Facebook from another Harvard student, who had launched a similar system internal to the college in September 2003. In leaked chats, he admitted to intentionally delaying work on a separate dating site that he was also helping to code, so that he could launch and promote his platform first, thus winning first mover advantage. He even joked that he knew this behavior was unethical, maybe borderline illegal.

Read the first article written about Facebook, “Hundreds Register for New Facebook Website,” by the Harvard Crimson. Read about Zuckerberg’s early legal battles in “The Battle for Facebook.”

03/20/2005

Zuckerberg Ignored Flaws in Facebook Until Exposed

Leaked messages and court records show that Zuckerberg was obsessed with media hype to bolster growth, and from early on repaired security problems on his platform only when they were exposed in the media, not when he was initially made aware of them.

In March 2005, for example, he received an email from fellow Harvard student Aaron Greenspan warning him of a security problem related to data exports that put at risk the data of students at 435 colleges. Zuckerberg responded immediately to the email, but days later had not bothered to address the problem. He did not fix the code until the following month, after Greenspan issued a press release about the flaw, and college newspapers at Harvard and Yale reported the story. In chat conversations with Greenspan, Zuckerberg appeared more concerned that negative publicity would cause “hysteria” than that thousands of people could have had their private data leaked.

07/2005

Facebook Opens to Other Colleges

Initially available only to Harvard students, the Facebook was soon opened up to other universities by its founders. It grew at an astonishing pace, reaching 1 million users less than a year after launching. "When it took off at Harvard I thought it'd be cool to make it a multi-school thing," Zuckerberg told Wired Magazine.

Net Profit $0

Users 2k

Moderators 0